AI video generation introduces uncertainty: users don’t know exactly what they’ll get, how long it will take, or how to fix results.

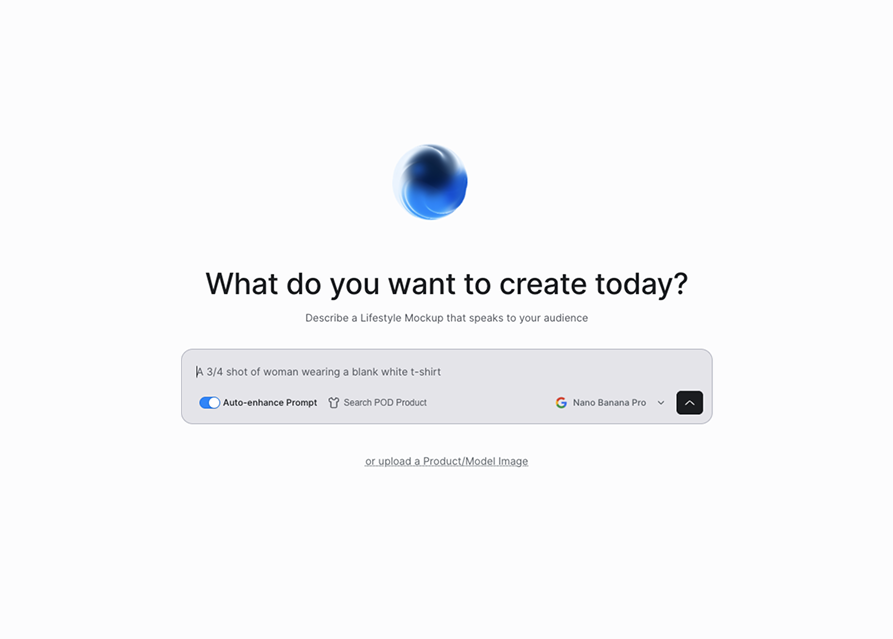

At the same time, the feature needed to feel fast, accessible, and aligned with existing creation workflows, while operating under real technical and performance constraints.

Users struggled with each of these unknowns, and the resulting uncertainty reduced adoption and confidence in the feature.

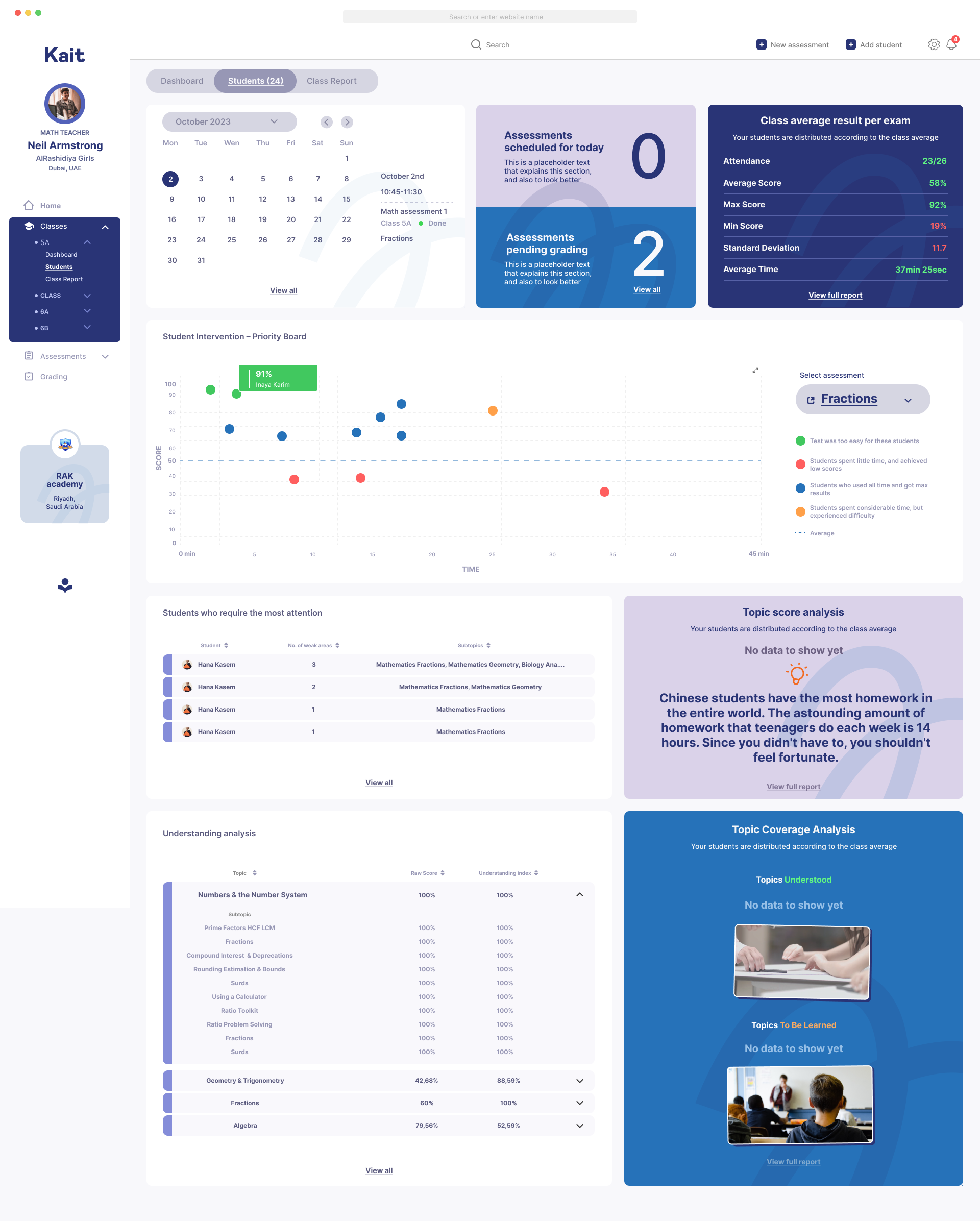

I treated the AI as a collaborator, not a black box.

The strategy focused on setting expectations early, showing progress clearly, and allowing users to guide outcomes without requiring technical knowledge.

Early concepts were validated quickly, then refined into production-ready designs.

I worked closely with engineering to align UX with AI capabilities and constraints.

Designs were created in Figma, reviewed alongside technical logic, and implemented with clear boundaries between user control and automated behavior.

The feature became easier to understand and more approachable for first-time users.

Adoption increased as users gained confidence in the AI output, and iteration became faster when results needed adjustment.

Trust is the core UX problem in AI features.

If I iterated further, I’d add more contextual previews and use prior generations to personalize results over time.

This case demonstrates my ability to design AI-native user experiences, balance automation with user control, and turn complex technical systems into clear, usable products.