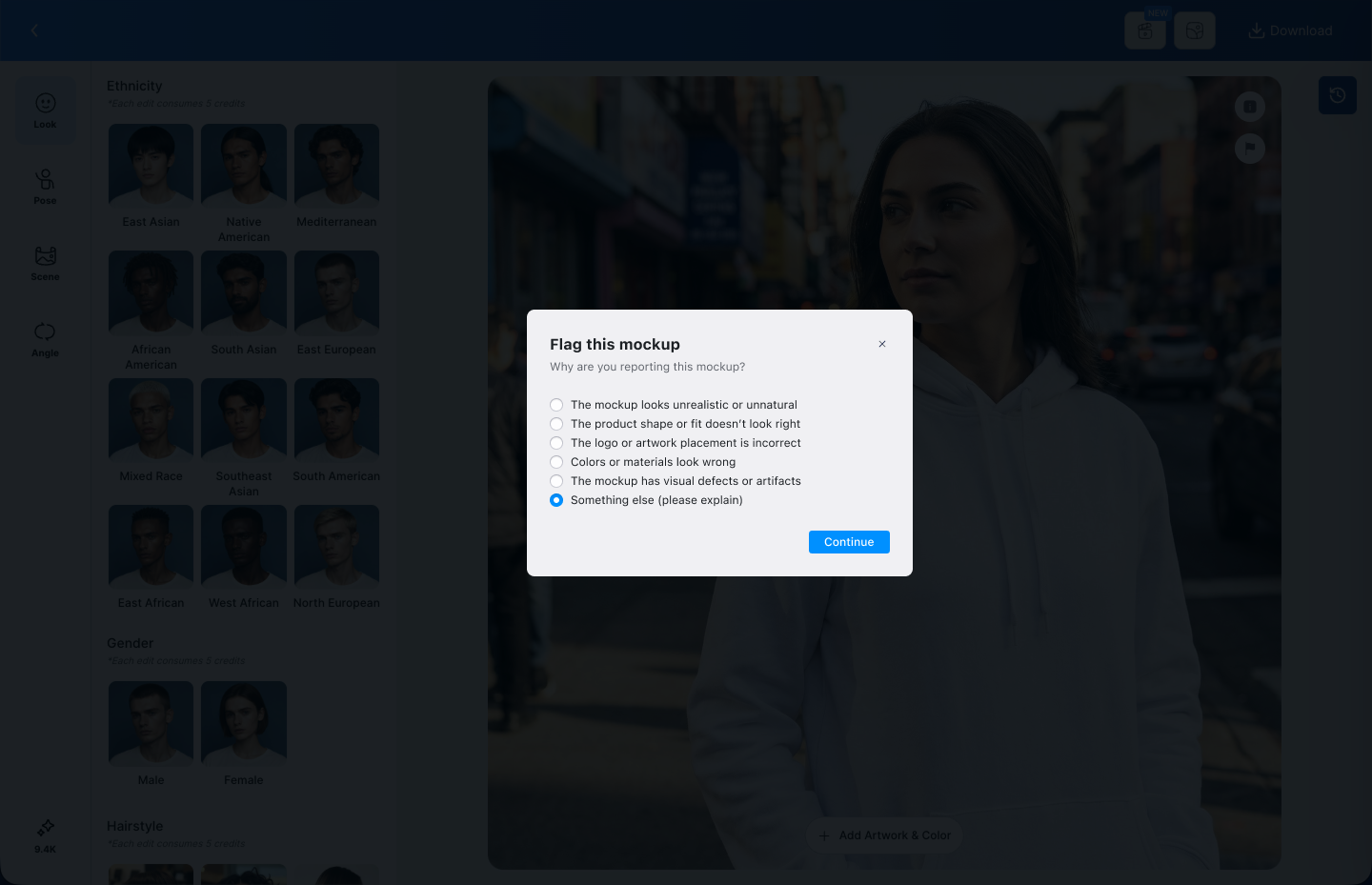

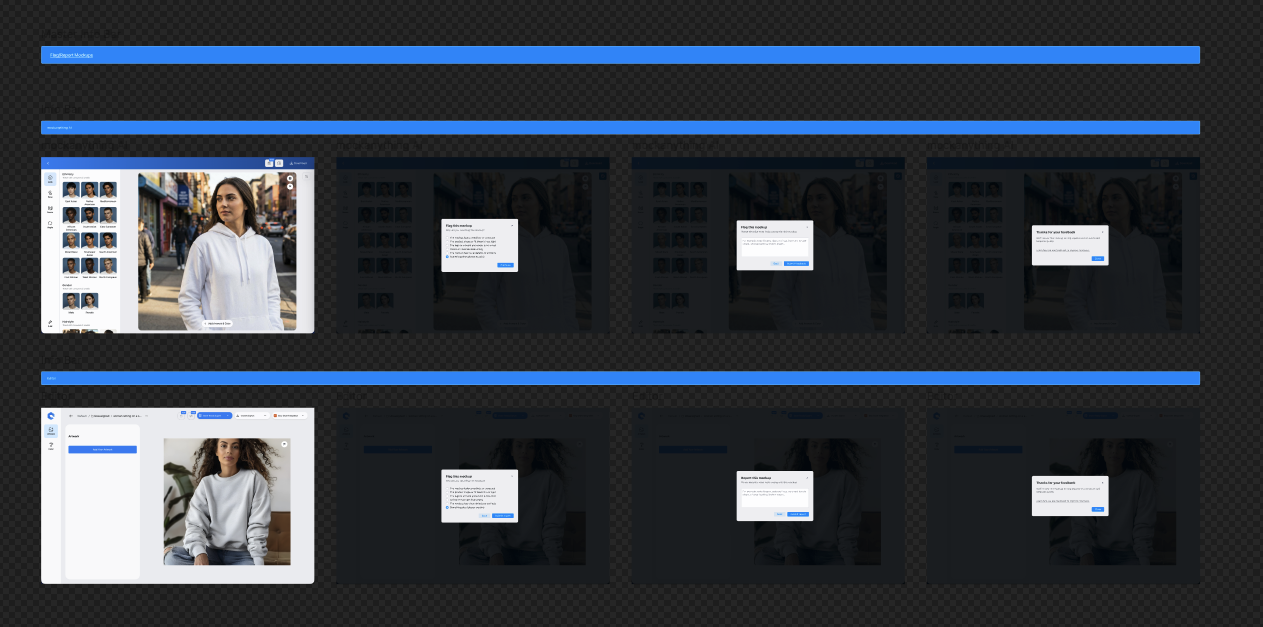

Dynamic Mockups relies on AI-assisted generation and complex rendering logic, both of which occasionally produce incorrect or low-quality results.

The challenge was to let users report issues without adding friction, blame, or support overhead, while ensuring feedback could be acted on internally.

Constraints included:

Users had no clear way to report bad outputs when something went wrong.

This resulted in:

The product needed a lightweight reporting mechanism that benefited both users and the platform.

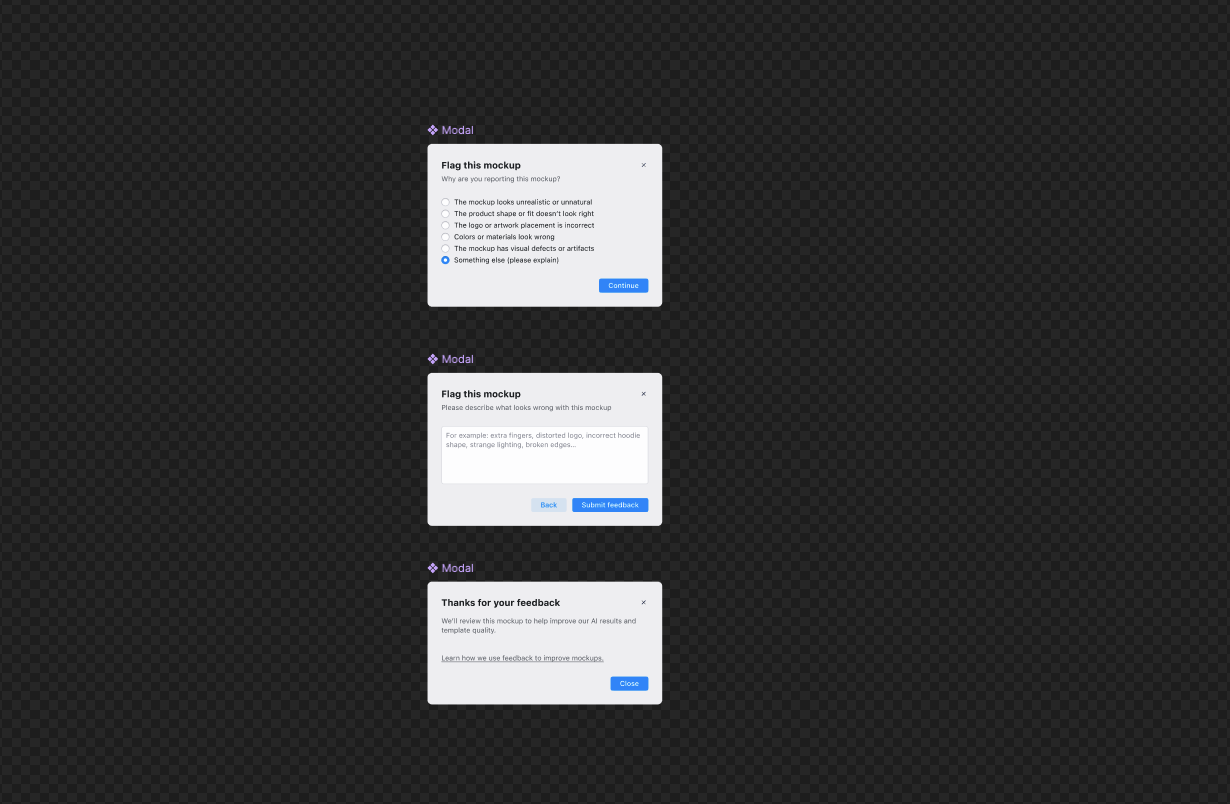

I approached this as a quality feedback system, not a support feature.

The goal was to normalize failure as part of AI creation, while making it clear that feedback directly improves the product.

The experience was designed to be optional, fast, and respectful of the user’s time.

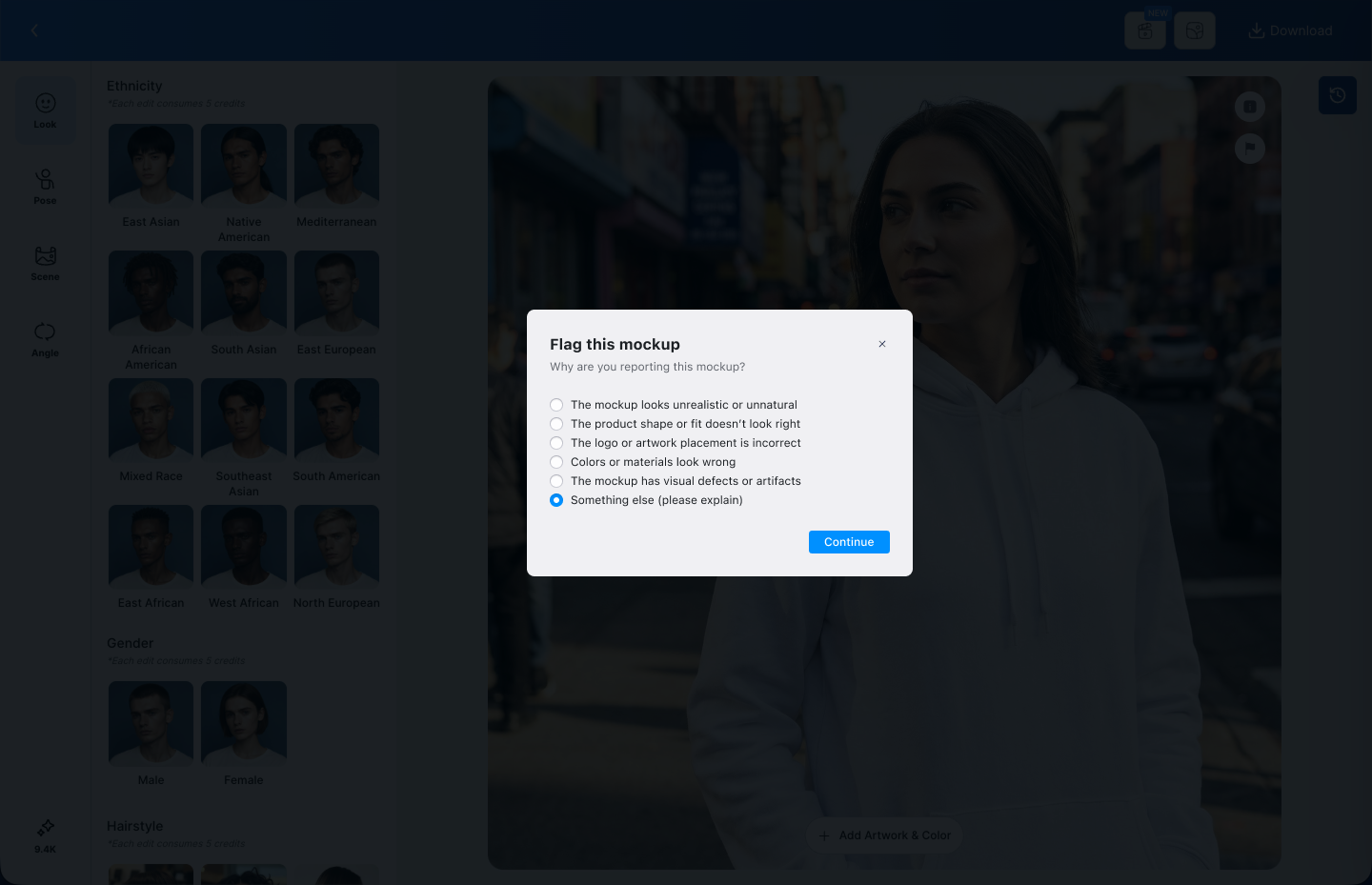

I worked closely with product and engineering to align UX decisions with real operational workflows.

The feature was designed in Figma, reviewed against internal processes, and implemented to support manual review while remaining scalable for future automation.

Users gained a clear way to report issues, increasing trust in the platform.

Internally, the team received structured, high-quality feedback that could be reused across multiple improvement areas, leading to better outputs over time.

Users are willing to help improve AI systems when the cost is low and intent is clear.

Next, I would close the loop by notifying users when a reported issue has been resolved, reinforcing the value of their feedback.

This case demonstrates my ability to design feedback-driven quality systems, align user trust with internal operations, and improve AI products through thoughtful, scalable UX decisions.