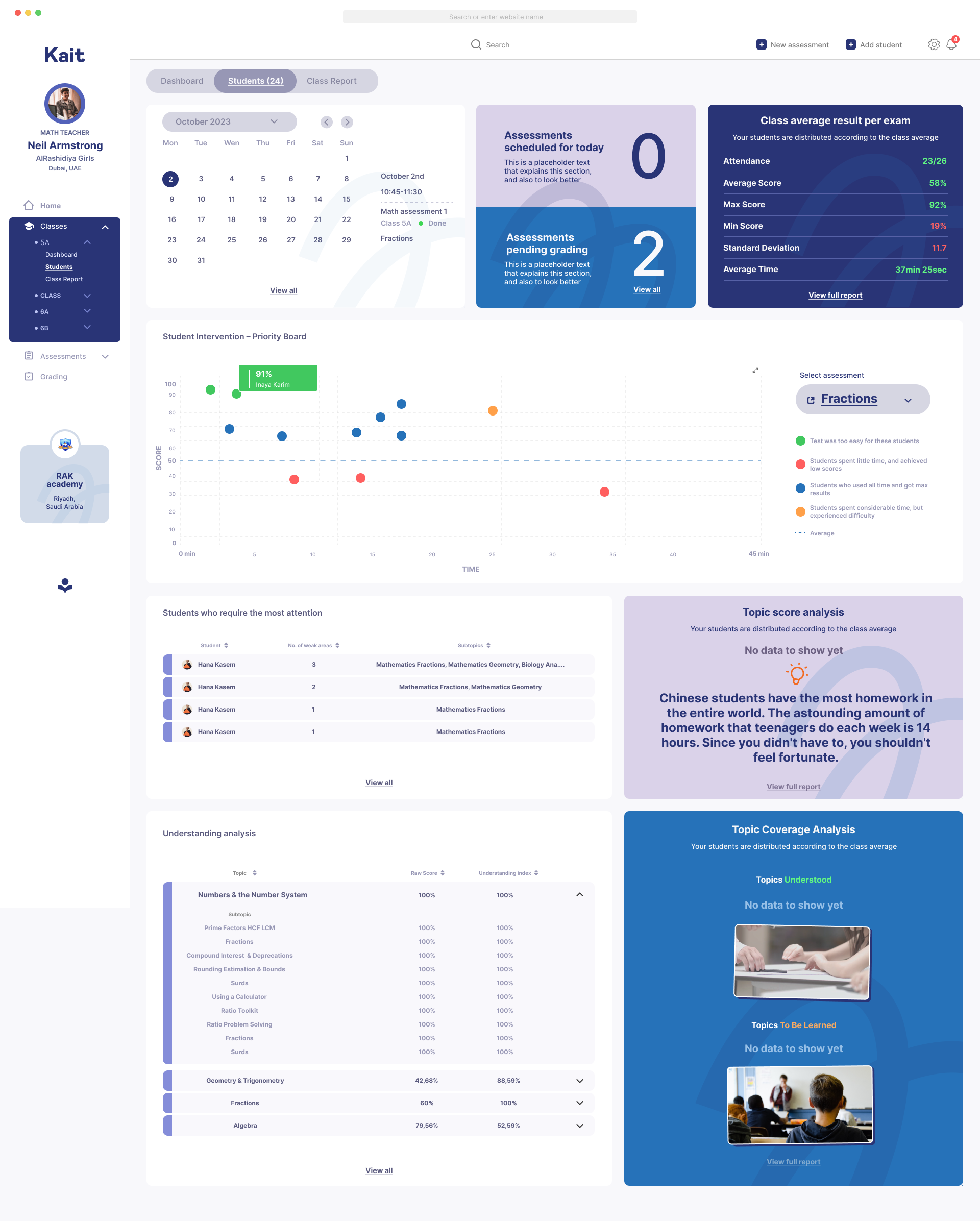

Kait processed large volumes of student performance data across subjects and skill levels.

The system needed to present insights in real time, work across devices, and remain understandable for both students and educators, while avoiding traditional grading pressure.

Students and teachers lacked a practical way to use this data: it existed in raw form, but it wasn't usable in everyday learning.

I focused on progress over grades.

The strategy was to replace static scores with dynamic feedback that showed improvement, momentum, and direction, making learning feel achievable and continuous.

I collaborated closely with engineering and data teams to align visual feedback with performance logic.

Design decisions ensured that insights remained clear and consistent even as underlying models evolved.

The feedback loop improved student engagement and encouraged consistent practice.

Teachers gained clearer insight into performance trends, while students felt more in control of their learning.

Feedback works best when it’s timely and framed positively.

In future iterations, I’d further personalize feedback messaging based on student behavior patterns.

This case demonstrates my ability to design data-driven feedback systems, balance motivation with performance tracking, and scale human-centered UX across large learning platforms.